Demystifying AI MCP: The Future of Scalable AI Model Configuration

In today’s rapidly evolving digital landscape, deploying artificial intelligence (AI) models at scale demands more than just powerful algorithms and vast data sets. It requires robust frameworks for managing how these models are configured, optimized, and deployed across diverse environments. Enter AI MCP (Artificial Intelligence Model Configuration Protocol), a concept gaining momentum among engineers and AI architects as a foundational approach to streamlining AI lifecycle management.

What is AI MCP?

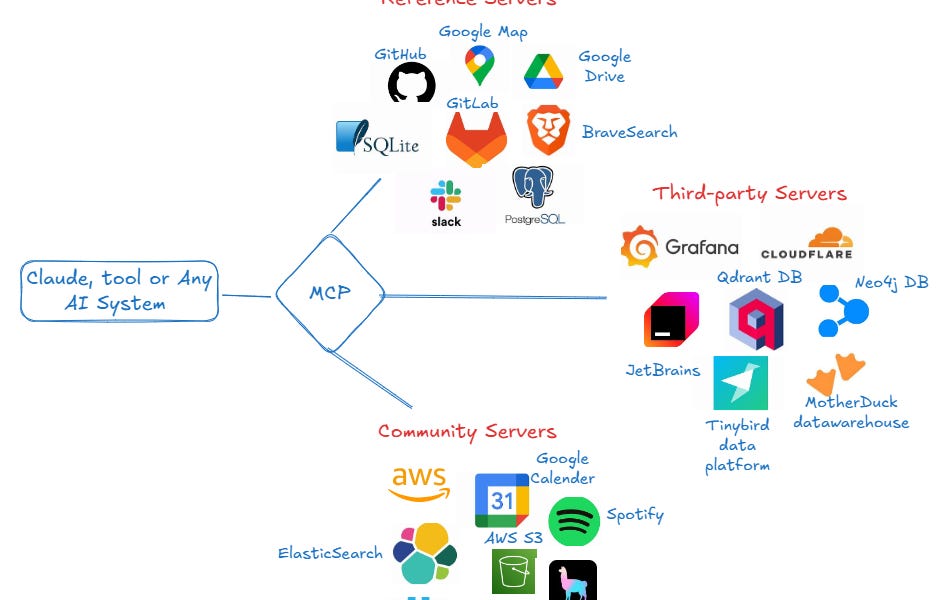

AI MCP (Model Configuration Protocol) refers to a structured framework or set of standards designed to manage the configuration, parameter tuning, and deployment settings of AI models. Think of it as the “blueprint layer” between data science and production environments, ensuring that models are not only well-trained but also well-managed throughout their lifecycle.

At its core, AI MCP aims to solve three primary challenges:

- Governance and Compliance: Supports audit trails, version control, and reproducibility, which are vital in regulated industries like healthcare, finance, and defense.

- Configuration Drift: Ensures consistency between development, staging, and production environments.

- Scalability: Facilitates the deployment of models across multi-cloud, edge, and on-prem infrastructure.
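To make configuration drift concrete, here is a minimal Python sketch that compares two nested configuration dictionaries, such as the ones a team might keep for staging and production, and reports every setting that differs or exists on only one side. The function name `diff_configs`, the `MISSING` sentinel, and the config keys are hypothetical; a real MCP setup would more likely load these from versioned JSON/YAML files than from inline literals.

```python
from typing import Any


class _Missing:
    """Sentinel marking a key that is absent from one side of the comparison."""

    def __repr__(self) -> str:
        return "<missing>"


MISSING = _Missing()


def diff_configs(
    expected: dict[str, Any], actual: dict[str, Any], prefix: str = ""
) -> list[tuple[str, Any, Any]]:
    """Recursively compare two nested configs.

    Returns (dotted_path, expected_value, actual_value) for every setting that
    differs or exists on only one side, in sorted key order.
    """
    drift: list[tuple[str, Any, Any]] = []
    for key in sorted(set(expected) | set(actual)):
        path = f"{prefix}{key}"
        left = expected.get(key, MISSING)
        right = actual.get(key, MISSING)
        if isinstance(left, dict) and isinstance(right, dict):
            # Descend into nested sections such as "hyperparameters" or "runtime".
            drift.extend(diff_configs(left, right, prefix=f"{path}."))
        elif left != right:
            drift.append((path, left, right))
    return drift


# Hypothetical staging and production configs for the same model.
staging = {
    "model": "churn-classifier",
    "hyperparameters": {"learning_rate": 0.01, "max_depth": 6},
    "runtime": {"python": "3.11", "gpu": False},
}
production = {
    "model": "churn-classifier",
    "hyperparameters": {"learning_rate": 0.05, "max_depth": 6},
    "runtime": {"python": "3.11"},
}

for path, want, got in diff_configs(staging, production):
    print(f"{path}: staging={want!r} production={got!r}")
# hyperparameters.learning_rate: staging=0.01 production=0.05
# runtime.gpu: staging=False production=<missing>
```

In a CI pipeline, a non-empty result could block promotion from staging to production, which is one way to enforce the environment consistency described above.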

Key Components of AI MCP

A modern AI MCP typically includes:

- Model Configuration Schema: A JSON/YAML-based standard describing hyperparameters, input/output types, dependencies, and pre/post-processing steps.

- Environment Definitions: Metadata for container specs, compute resources, and the runtime environment (e.g., Python version and library versions).

- Versioning and Rollbacks: Built-in mechanisms to version-control model configurations and roll back to a previous stable build.

- Monitoring and Logging Hooks: Integrated logging endpoints and telemetry for performance and drift detection.

- Security Policies: Configurable rules for access control, encryption, and sandboxing.
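The components above map naturally onto a typed schema. The following Python sketch uses standard-library dataclasses to model a configuration document with hyperparameters, input/output types, an environment definition, a security policy, and a telemetry hook, and it rejects unsupported feature types at load time. All field names, the `ALLOWED_TYPES` set, the `load_config` function, and the example values (including the telemetry URL) are illustrative assumptions rather than part of any published MCP standard; versioning is left out here for brevity.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Optional

# Hypothetical set of feature types this sketch accepts in input/output schemas.
ALLOWED_TYPES = {"int", "float", "str", "bool"}


@dataclass(frozen=True)
class EnvironmentSpec:
    python_version: str
    packages: dict[str, str]  # package name -> pinned version
    cpu_cores: int = 1
    memory_gb: float = 1.0
    gpu: bool = False


@dataclass(frozen=True)
class SecurityPolicy:
    allowed_roles: tuple[str, ...] = ()
    encrypt_at_rest: bool = True
    sandboxed: bool = True


@dataclass(frozen=True)
class ModelConfig:
    name: str
    version: str
    hyperparameters: dict[str, Any]
    input_schema: dict[str, str]  # feature name -> type name
    output_schema: dict[str, str]
    environment: EnvironmentSpec
    security: SecurityPolicy = field(default_factory=SecurityPolicy)
    telemetry_endpoint: Optional[str] = None  # monitoring/logging hook


def load_config(text: str) -> ModelConfig:
    """Parse a JSON config document and validate it before use."""
    raw = json.loads(text)
    errors = []
    for section in ("input_schema", "output_schema"):
        for feature, type_name in raw[section].items():
            if type_name not in ALLOWED_TYPES:
                errors.append(f"{section}.{feature}: unsupported type {type_name!r}")
    env = EnvironmentSpec(**raw["environment"])  # unknown keys raise TypeError
    if env.memory_gb <= 0:
        errors.append("environment.memory_gb must be positive")
    if errors:
        raise ValueError("; ".join(errors))

    sec = raw.get("security", {})
    security = SecurityPolicy(
        allowed_roles=tuple(sec.get("allowed_roles", ())),
        encrypt_at_rest=sec.get("encrypt_at_rest", True),
        sandboxed=sec.get("sandboxed", True),
    )
    return ModelConfig(
        name=raw["name"],
        version=raw["version"],
        hyperparameters=raw.get("hyperparameters", {}),
        input_schema=raw["input_schema"],
        output_schema=raw["output_schema"],
        environment=env,
        security=security,
        telemetry_endpoint=raw.get("telemetry_endpoint"),
    )


EXAMPLE = """
{
  "name": "churn-classifier",
  "version": "1.4.0",
  "hyperparameters": {"learning_rate": 0.01, "max_depth": 6},
  "input_schema": {"tenure_months": "int", "monthly_spend": "float"},
  "output_schema": {"churn_probability": "float"},
  "environment": {"python_version": "3.11", "packages": {"scikit-learn": "1.4.2"}, "memory_gb": 2},
  "security": {"allowed_roles": ["ml-serving"]},
  "telemetry_endpoint": "https://telemetry.example.com/metrics"
}
"""

config = load_config(EXAMPLE)
print(config.name, config.environment.python_version, config.security.allowed_roles)
# churn-classifier 3.11 ('ml-serving',)
```

Validating at load time means a malformed configuration fails before deployment rather than at inference time, and the frozen dataclasses discourage code from mutating a config after it has been checked.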

Why Does AI MCP Matter?

As organizations increasingly shift toward AI-driven decision-making, the overhead of managing hundreds (or even thousands) of models becomes significant. Without a formal configuration protocol:

- Small misconfigurations can lead to big failures.

- Cross-team collaboration becomes chaotic.

- Debugging model behavior in production becomes a nightmare.

AI MCP introduces a single source of truth for how models are expected to behave, making it easier to detect anomalies, troubleshoot issues, and maintain trust in AI systems.
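One way to get such a single source of truth, together with the versioning and rollback component listed earlier, is to identify each configuration by a hash of its canonical JSON form. The Python sketch below keeps an append-only history per model, so a rollback moves an "active" pointer instead of deleting anything, which also preserves an audit trail. `ConfigRegistry` and its methods are hypothetical names, and a production system would persist this state (for example, in a database or a Git repository) rather than in memory.

```python
import copy
import hashlib
import json
from typing import Any


class ConfigRegistry:
    """Hypothetical in-memory registry keeping an append-only config history per model."""

    def __init__(self) -> None:
        self._history: dict[str, list[tuple[str, dict[str, Any]]]] = {}
        self._active: dict[str, int] = {}  # model name -> index of the active entry

    @staticmethod
    def fingerprint(config: dict[str, Any]) -> str:
        # Canonical JSON (sorted keys, fixed separators) so logically equal
        # configs produce the same digest regardless of key order.
        canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
        # Truncated to 12 hex characters for readability in this sketch.
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

    def register(self, model: str, config: dict[str, Any]) -> str:
        """Append a new config version, make it active, and return its fingerprint."""
        digest = self.fingerprint(config)
        history = self._history.setdefault(model, [])
        history.append((digest, copy.deepcopy(config)))
        self._active[model] = len(history) - 1
        return digest

    def active(self, model: str) -> tuple[str, dict[str, Any]]:
        """Return (fingerprint, config) of the currently active version."""
        return self._history[model][self._active[model]]

    def rollback(self, model: str, digest: str) -> None:
        """Re-activate an earlier version; history is never rewritten."""
        for index, (entry_digest, _) in enumerate(self._history[model]):
            if entry_digest == digest:
                self._active[model] = index
                return
        raise KeyError(f"{model}: no registered config with fingerprint {digest}")

    def matches_active(self, model: str, deployed: dict[str, Any]) -> bool:
        """Check whether a config observed in production equals the active version."""
        return self.fingerprint(deployed) == self.active(model)[0]


registry = ConfigRegistry()
v1 = registry.register("churn-classifier", {"learning_rate": 0.01, "max_depth": 6})
v2 = registry.register("churn-classifier", {"learning_rate": 0.05, "max_depth": 6})
registry.rollback("churn-classifier", v1)
print(registry.active("churn-classifier")[1])  # {'learning_rate': 0.01, 'max_depth': 6}
print(registry.matches_active("churn-classifier", {"max_depth": 6, "learning_rate": 0.01}))  # True
```

The `matches_active` check is where the anomaly detection mentioned above comes in: if the fingerprint of what is actually running differs from the registered active version, something changed outside the protocol.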

Real-World Use Cases

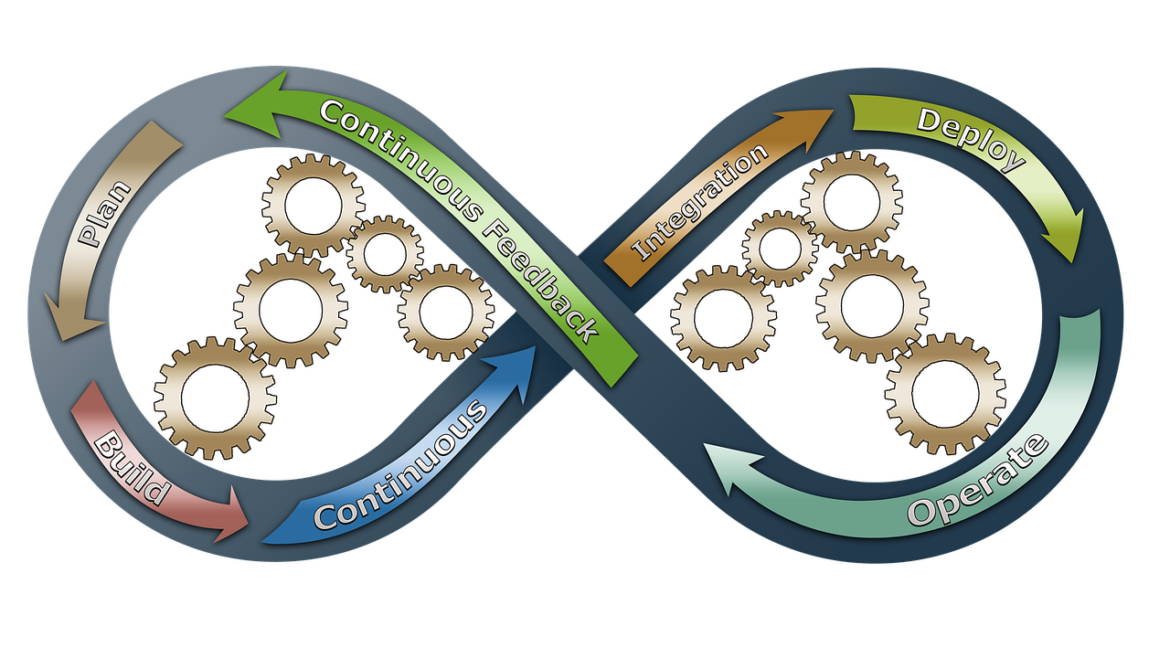

- MLOps Platforms: Tools like MLflow, Kubeflow, and SageMaker use MCP-like structures (for example, MLflow's MLmodel file or a SageMaker endpoint configuration) to define how models are trained and served.

- Edge AI Deployment: When deploying models to edge devices (IoT, mobile), AI MCP helps define lightweight variants and runtime constraints.

- AI Compliance: In regulated industries, AI MCP plays a key role in documenting how a model was trained and what parameters were used — crucial for audits.
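For the edge deployment case, one common pattern (assumed here, not prescribed by any MCP standard) is to describe a lightweight variant as a small set of overrides on a base configuration, then check the merged result against a device's declared limits before shipping it. The Python sketch below implements a recursive merge and a simple constraint check; the function names, the keys `memory_mb`, `model_size_mb`, `storage_mb`, and `gpu`, and all of the numbers are illustrative.

```python
import copy
from typing import Any


def deep_merge(base: dict[str, Any], overrides: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict with overrides applied recursively; base is not modified."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def check_constraints(config: dict[str, Any], limits: dict[str, Any]) -> list[str]:
    """List every way a variant's declared runtime needs exceed a device's limits."""
    runtime = config.get("runtime", {})
    problems = []
    if runtime.get("memory_mb", 0) > limits["memory_mb"]:
        problems.append(f"needs {runtime['memory_mb']} MB RAM, device has {limits['memory_mb']} MB")
    if runtime.get("model_size_mb", 0) > limits["storage_mb"]:
        problems.append(f"model is {runtime['model_size_mb']} MB, device storage is {limits['storage_mb']} MB")
    if runtime.get("gpu", False) and not limits.get("gpu", False):
        problems.append("variant requires a GPU, device has none")
    return problems


base = {
    "model": "object-detector",
    "precision": "fp32",
    "runtime": {"memory_mb": 2048, "model_size_mb": 240, "gpu": True},
}
# Hypothetical edge overrides, e.g. an int8-quantized build with a smaller footprint.
edge_overrides = {
    "precision": "int8",
    "runtime": {"memory_mb": 256, "model_size_mb": 60, "gpu": False},
}
edge = deep_merge(base, edge_overrides)
camera_limits = {"memory_mb": 512, "storage_mb": 128, "gpu": False}

print(len(check_constraints(base, camera_limits)))  # 3
print(check_constraints(edge, camera_limits))  # []
```

Keeping the edge variant as overrides means a change to the base configuration flows to every variant automatically, while the constraint check catches variants that no longer fit their target hardware.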

Looking Ahead: The Standardization of AI MCP

The future of AI MCP lies in standardization. Just like OpenAPI changed how we describe REST APIs, we may soon see an open standard for AI MCP that’s universally adopted. This would unlock better interoperability between platforms, simplify onboarding for new AI engineers, and improve the reliability of model deployments.

Final Thoughts

AI MCP is not just a technical add-on; it’s a necessity for any organization serious about operationalizing AI at scale. By treating model configurations with the same rigor as application code or infrastructure, teams can build AI systems that are not only powerful, but also dependable, explainable, and secure.